| Specification | Value |
|---|---|
| Type | GPU (Graphics Processing Unit) |
| Model | NVIDIA H100 |
| Form Factor | Full Height/Full Length (FHFL) |
| GPU Architecture | NVIDIA Hopper |
| Power Consumption | 350W |
| Slot Occupancy | Dual-slot |
| Thermal Solution | Passive |

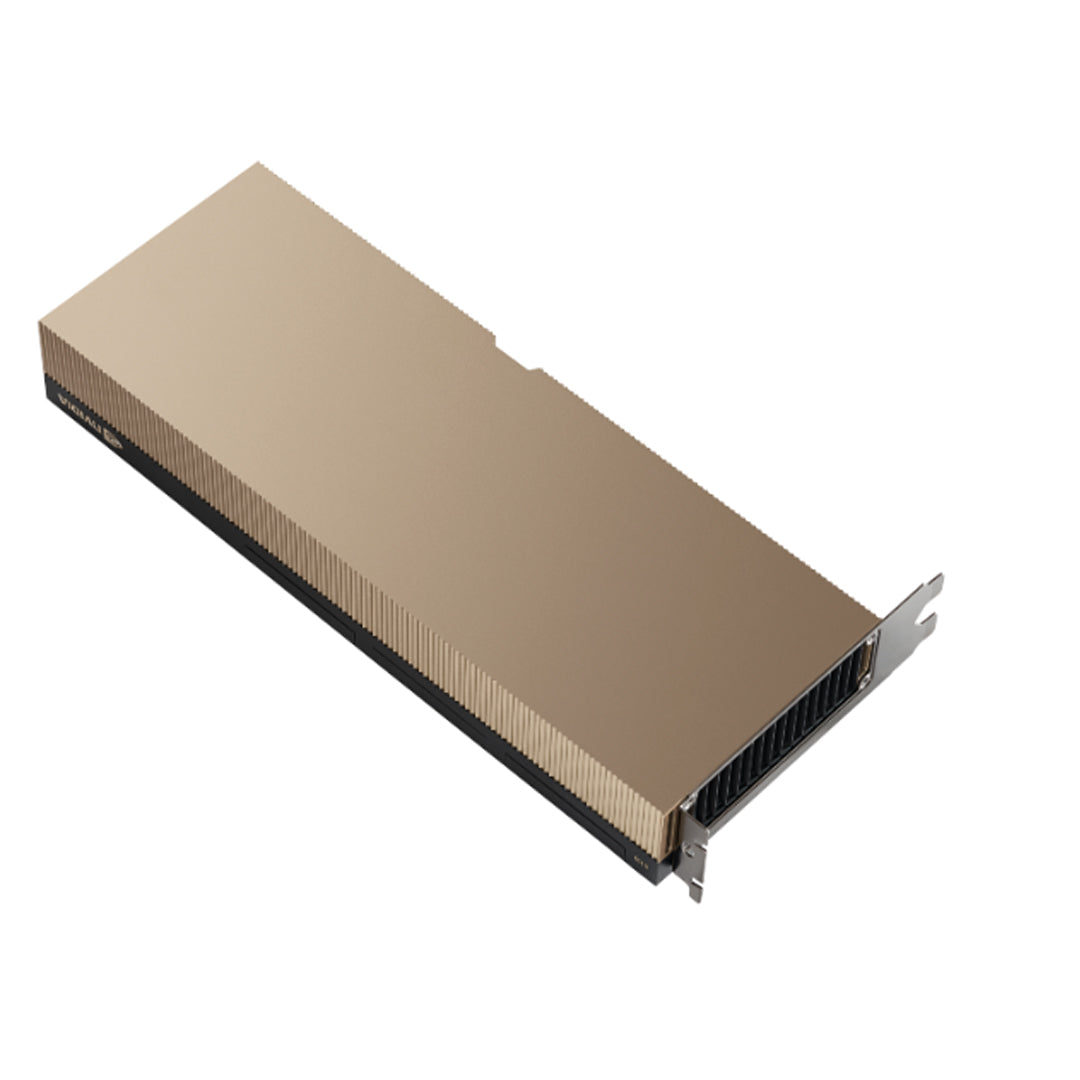

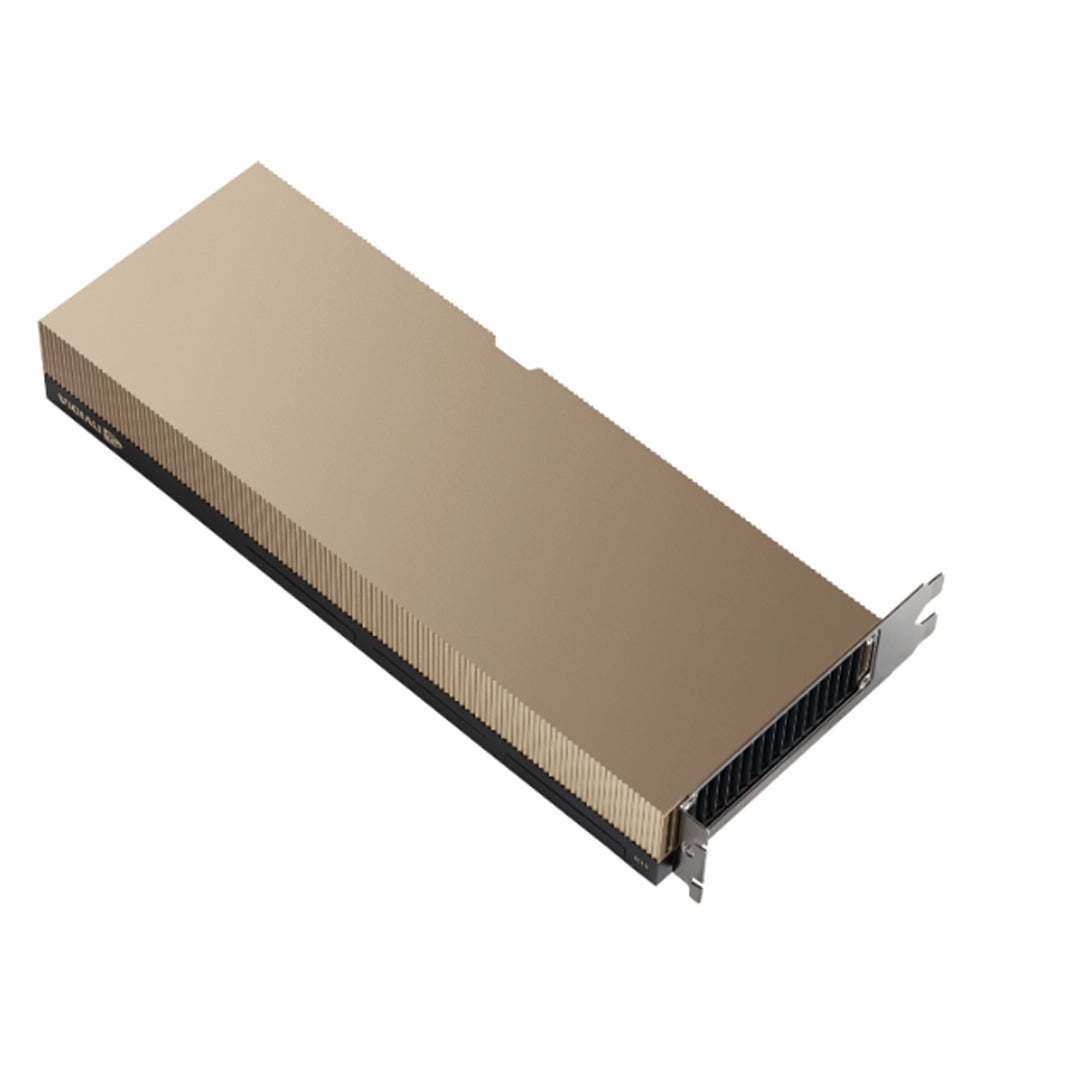

Cisco NVIDIA H100 80GB FHFL GPU, 350W Passive PCIe

Key Benefits

- Accelerates AI and HPC workloads with 80GB of HBM2e memory (2.0 TB/s peak bandwidth) within a 350W power envelope.

- Passive thermal design relies on server chassis airflow, suiting high-density data center servers built to cool passive accelerators.

- TAA compliant, meeting the Trade Agreements Act requirements common in U.S. federal government procurement.

Product Overview

The Cisco NVIDIA H100 is a dual-slot, Full Height/Full Length (FHFL) PCIe accelerator for AI, HPC, and enterprise workloads. It draws up to 350W and uses a passive heatsink, so it depends on the host server's chassis fans for cooling and is intended for data center servers designed to provide that airflow.

Its 80GB of HBM2e memory runs at 1593 MHz over a 5120-bit interface, giving a peak memory bandwidth of about 2.0 TB/s for large models and datasets. The GPU is built on the NVIDIA Hopper architecture and connects to the host over a PCI Express 5.0 x16 interface. The card is TAA compliant, which satisfies the Trade Agreements Act country-of-origin requirement used in U.S. federal government purchasing; it is not a general approval for regulated industries.
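The listed 2.0 TB/s maximum memory bandwidth can be reproduced from the 1593 MHz memory clock and the 5120-bit interface. The sketch below assumes HBM2e transfers data on both clock edges (two transfers per clock, about 3.2 Gb/s per pin); the function name is illustrative, not part of any NVIDIA tool.

```python
def peak_memory_bandwidth_gbs(clock_mhz: float, bus_width_bits: int,
                              transfers_per_clock: int = 2) -> float:
    """Peak memory bandwidth in decimal GB/s (1 GB = 10**9 bytes).

    transfers_per_clock=2 assumes double-data-rate signaling, as used by HBM2e.
    """
    transfers_per_second = clock_mhz * 1e6 * transfers_per_clock
    bytes_per_transfer = bus_width_bits / 8  # 5120 bits -> 640 bytes per transfer
    return transfers_per_second * bytes_per_transfer / 1e9


bw = peak_memory_bandwidth_gbs(1593, 5120)
print(f"{bw:.0f} GB/s, about {bw / 1000:.2f} TB/s")  # 2039 GB/s, about 2.04 TB/s
```

The result, roughly 2.04 TB/s, rounds to the 2.0 TB/s given in the specification table. It is a theoretical peak; sustained bandwidth in real workloads is lower.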

It is suited to machine learning, AI inference, data analytics, and virtualized environments. As a standard PCIe card, it adds acceleration to dense compute servers while remaining straightforward to install and service. The card is manufactured in Taiwan.

Specifications

Memory

| Specification | Value |
|---|---|
| Memory Type | HBM2e |
| Installed Video Memory | 80 GB |
| Memory Speed | 1593 MHz |
| Memory Interface Width | 5120-bit |
| Maximum Memory Bandwidth | 2.0 TB/s |
| ECC Memory Support | Yes |

Compliance & Origin

| Specification | Value |
|---|---|
| Country of Origin | Taiwan |
| TAA Compliant | Yes |
| RoHS Compliance | Yes |
| REACH Compliance | Yes |

Advanced Security Features

| Specification | Value |
|---|---|
| Secure Boot | Yes |
| Firmware Signing | Yes |
| SBOM (Software Bill of Materials) | Supported |
| NVIDIA Confidential Computing | Supported |

Interfaces

| Specification | Value |
|---|---|
| Interface Type | PCI Express 5.0 x16 |
| Compatible Slot | PCIe Gen5 x16 |
| Bandwidth (per direction) | 64 GB/s |
| Total Bi-directional Bandwidth | 128 GB/s |
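The interface figures follow from the PCIe 5.0 signaling rate of 32 GT/s per lane: 16 lanes × 32 GT/s ÷ 8 bits per byte gives the 64 GB/s per-direction figure, doubled to 128 GB/s for both directions. These are raw rates. PCIe 5.0 uses 128b/130b line encoding, so usable bandwidth is about 63 GB/s per direction, and packet and protocol overhead lowers real throughput further. A minimal sketch of the arithmetic, with the encoding ratio as the only extra parameter (the function and constant names are illustrative):

```python
def pcie_bandwidth_gbs(gt_per_s: float, lanes: int,
                       encoding_efficiency: float = 1.0) -> float:
    """One-direction PCIe bandwidth in GB/s.

    Each transfer moves one bit per lane, so divide by 8 to get bytes.
    encoding_efficiency=1.0 gives the raw rate; 128/130 removes line-code overhead.
    """
    return gt_per_s * lanes * encoding_efficiency / 8


GEN5_GT_PER_S = 32.0            # PCIe 5.0 signaling rate per lane
LANES = 16                      # x16 slot
ENCODING_128B_130B = 128 / 130  # line-code efficiency for PCIe 3.0 and later

raw = pcie_bandwidth_gbs(GEN5_GT_PER_S, LANES)
effective = pcie_bandwidth_gbs(GEN5_GT_PER_S, LANES, ENCODING_128B_130B)
print(f"raw, per direction:        {raw:.0f} GB/s")        # 64 GB/s
print(f"raw, both directions:      {2 * raw:.0f} GB/s")    # 128 GB/s
print(f"after 128b/130b, per dir.: {effective:.1f} GB/s")  # 63.0 GB/s
```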

Physical & Environmental

| Specification | Value |
|---|---|
| Cooling | Passive (requires chassis airflow) |
| Form Factor Detail | FHFL (Full Height, Full Length) |
| Slot Requirement | 2 PCIe slots |
| Operating Temperature Range | 0°C to 55°C |
| Non-Operating Temperature Range | -40°C to 75°C |
| Operating Humidity | 10% to 90% (non-condensing) |
| Non-Operating Humidity | 5% to 95% (non-condensing) |
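For monitoring or deployment checks, the environmental limits can be encoded as simple inclusive ranges. This is a minimal sketch under stated assumptions: the readings are taken to be ambient air temperature and relative humidity around the card (the listing does not say where the 0°C to 55°C range is measured), and the `Envelope` class is hypothetical. The "non-condensing" condition cannot be verified from a humidity reading alone.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Envelope:
    """Inclusive temperature (deg C) and relative humidity (%) limits."""
    temp_min_c: float
    temp_max_c: float
    rh_min_pct: float
    rh_max_pct: float

    def allows(self, temp_c: float, rh_pct: float) -> bool:
        # Both readings must fall inside their inclusive ranges.
        # Assumption: readings are ambient air at the card; condensation is not checked.
        return (self.temp_min_c <= temp_c <= self.temp_max_c
                and self.rh_min_pct <= rh_pct <= self.rh_max_pct)


# Limits copied from the Physical & Environmental table.
OPERATING = Envelope(0, 55, 10, 90)
NON_OPERATING = Envelope(-40, 75, 5, 95)

print(OPERATING.allows(35, 45))       # True
print(OPERATING.allows(60, 45))       # False: above 55 deg C
print(NON_OPERATING.allows(-20, 50))  # True: within the powered-off range
```

Because the card has no fan of its own, a failing reading usually points to insufficient chassis airflow rather than a fault in the card.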

Cloud Management & Licensing

| Specification | Value |
|---|---|
| Management Interface | NVIDIA GPU Operator, NVIDIA NGC (for containerized workflows) |
| License Model | Requires separate NVIDIA Enterprise software licensing |
| Included Software Features | Support for NVIDIA AI Enterprise Suite (license required) |
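The NVIDIA GPU Operator is listed as the management interface for containerized workflows. On Kubernetes, the GPU Operator deploys NVIDIA's device plugin, which advertises GPUs as the extended resource `nvidia.com/gpu`; a pod requests the card through a resource limit. The sketch below builds such a Pod manifest as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. The pod name `h100-smoke-test` and the image reference are hypothetical placeholders; replace `<tag>` with a CUDA image tag from NGC that matches your installed driver.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a minimal Kubernetes Pod that requests `gpus` NVIDIA GPUs."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # Run once and exit; do not restart after nvidia-smi finishes.
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "cuda",
                    "image": image,
                    # Print the GPUs visible inside the container.
                    "command": ["nvidia-smi"],
                    # Extended resource advertised by the NVIDIA device plugin.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


# Hypothetical pod name and image placeholder: substitute a real NGC tag.
manifest = gpu_pod_manifest("h100-smoke-test", "nvcr.io/nvidia/cuda:<tag>")
print(json.dumps(manifest, indent=2))
```

Save the output as, for example, `pod.json`, apply it with `kubectl apply -f pod.json`, and read the result with `kubectl logs h100-smoke-test`. Using the NVIDIA AI Enterprise software stack on top of this still requires the separate license noted in the table.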